Human Hair Segmentation In The Wild Using Deep Shape Prior

Yongzhe Yan1,2, Anthony Berthelier1,2, Stefan Duffner3, Xavier Naturel2, Christophe Garcia3 and Thierry Chateau1

1 Université Clermont Auvergne, 2 Wisimage, 3 LIRIS

Presented at the Third Workshop on CV4ARVR, CVPR 2019, Long Beach

Paper Link, Additional Visual Results, Poster and Explainer Video

Abstract

Virtual hair dyeing has become a popular Augmented Reality (AR) application in recent years. Human hair exhibits diverse color and texture, which vary significantly from case to case depending on hairstyle and environmental lighting. However, the publicly available hair segmentation datasets are relatively small. As a result, hair segmentation is easily disturbed by cluttered backgrounds in practical use. In this paper, we propose to integrate a shape prior into a Fully Convolutional Neural Network (FCNN) to mitigate this issue. In the first stage, we use an FCNN with an Atrous Spatial Pyramid Pooling (ASPP) module to predict a human hair shape prior based on a specific distance transform. In the second stage, we combine the hair shape prior with the original image to form the input of a symmetric encoder-decoder FCNN, which produces the final hair segmentation. Both quantitative and qualitative results show that our method achieves state-of-the-art performance on the publicly available LFW-Part and Figaro1k datasets.

Challenge

- Human Hair Segmentation In The Wild: Perform hair segmentation in an unconstrained view without any explicit prior face/head-shoulder detection.

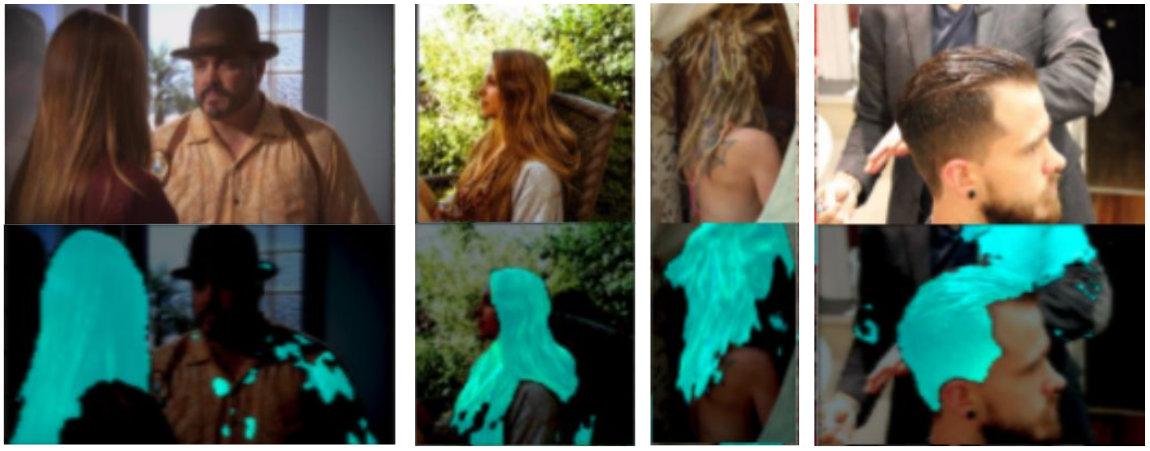

- A problem in practical applications: a cluttered background disturbs deep CNN segmentation, especially when the training dataset is small, and produces spurious hair detections on background regions.

Our Structure

- We propose to construct a shape prior to constrain and guide the hair segmentation.
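
To make the idea of a distance-transform-based shape prior more concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the paper's implementation: the exact distance transform used by the authors is not specified here, so the sketch assumes a truncated, linearly decaying Euclidean distance to the hair region. The function names (`distance_to_mask`, `shape_prior_target`, `stack_prior`) and the `truncate` parameter are hypothetical. The last function shows one plausible way to combine the prior with the image, by appending it as a fourth input channel.

```python
import numpy as np


def distance_to_mask(mask: np.ndarray) -> np.ndarray:
    """Euclidean distance from every pixel to the nearest hair pixel.

    Brute force, O(H * W * K) memory for K hair pixels, so only suitable
    for small masks. A library routine such as SciPy's
    distance_transform_edt would be the practical choice.
    """
    h, w = mask.shape
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        # No hair pixel at all: every pixel is infinitely far away.
        return np.full((h, w), np.inf)
    fg = np.stack([ys, xs], axis=1)                                # (K, 2)
    grid = np.stack(
        np.meshgrid(np.arange(h), np.arange(w), indexing="ij"), axis=-1
    )                                                              # (H, W, 2)
    diff = grid[:, :, None, :] - fg[None, None, :, :]              # (H, W, K, 2)
    return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=-1)


def shape_prior_target(mask: np.ndarray, truncate: float = 20.0) -> np.ndarray:
    """Soft prior in [0, 1] (assumed form, not taken from the paper).

    1 inside the hair mask, decaying linearly to 0 at `truncate` pixels away.
    """
    d = distance_to_mask(mask)
    return np.clip(1.0 - d / truncate, 0.0, 1.0)


def stack_prior(image: np.ndarray, prior: np.ndarray) -> np.ndarray:
    """Append the prior as a 4th channel: (H, W, 3) + (H, W) -> (H, W, 4).

    Assumes `image` is a float array, e.g. RGB values scaled to [0, 1].
    """
    return np.concatenate(
        [image, prior[..., None].astype(image.dtype)], axis=-1
    )
```

In this sketch, a first-stage network would be trained to regress `shape_prior_target(mask)` from the image, and the second-stage network would receive the output of `stack_prior(image, predicted_prior)` as its input.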

Our Results

Our method effectively removes false-positive detections on cluttered backgrounds.
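
One simple way to quantify false positives on the background is the fraction of ground-truth background pixels that a prediction labels as hair. The sketch below is a generic illustration, not necessarily the metric reported in the paper, and the function name `background_false_positive_rate` is hypothetical.

```python
import numpy as np


def background_false_positive_rate(pred: np.ndarray, gt: np.ndarray) -> float:
    """Fraction of ground-truth background pixels predicted as hair.

    `pred` and `gt` are binary masks of the same shape (True/1 = hair).
    """
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    background = ~gt
    n_background = background.sum()
    if n_background == 0:
        # Image is entirely hair: no background pixel can be mislabeled.
        return 0.0
    return float((pred & background).sum() / n_background)
```

A lower value means fewer spurious hair pixels on the background, and a prediction with no hair pixels outside the ground-truth mask scores 0.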